Deep Learning In Autonomous Vehicles / ADAS

Autonomous vehicle operation:

- Perception

- Localization

- Path Planning

- Control

Computer vision (Perception) training requires a lot of visual data, which a real-time 3D engine can readily generate from virtual scenarios.

Virtual scenarios can also be used for pre-validation of autonomous system behavior (Control), i.e. virtual test driving.

Requirements for virtual 3D environments:

- Photorealistic image quality

- Physically-correct lighting

- Multiple wide-angle surrounding cameras

- Sensor simulation (LIDAR, radar, sonar…)

- Access to semantic data of objects (labels)

- Procedural scene configuration (vehicle/pedestrian positions, obstacles, lighting, weather conditions)

Compared to conventional approaches (manual dataset gathering and labeling, vehicle testing tracks), using a 3D engine requires less time, effort, and resources.

Photorealistic Visuals

- Physically-based rendering

- Energy conservation model

- Dynamic lights, shadows, and reflections

- GGX BRDF: realistic specular highlights from light sources

- Fresnel reflections, reflections on rough surfaces

- Screen Space Ray Tracing Reflections (SSRR)

- Configurable anti-aliasing algorithms

- Unique Screen-Space Ray Tracing Global Illumination (SSRTGI) technology

Physically-correct visualization of the learning dataset = reliable computer vision for real roads.
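To make the GGX and Fresnel terms in the list above concrete, here is a minimal Python sketch of the two textbook formulas: the GGX (Trowbridge-Reitz) normal distribution and Schlick's Fresnel approximation. The function names and the `roughness * roughness` remapping are assumptions chosen for illustration; they are not taken from the UNIGINE shader code.

```python
import math


def ggx_ndf(n_dot_h: float, roughness: float) -> float:
    """GGX (Trowbridge-Reitz) normal distribution function.

    n_dot_h   -- cosine between the surface normal and the half vector
    roughness -- perceptual roughness in (0, 1]; squared here to get alpha
                 (a common convention, assumed for this sketch)
    """
    alpha = roughness * roughness
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def fresnel_schlick(cos_theta: float, f0: float) -> float:
    """Schlick's approximation of Fresnel reflectance.

    cos_theta -- cosine between the view direction and the half vector
    f0        -- reflectance at normal incidence (about 0.04 for many dielectrics)
    """
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


if __name__ == "__main__":
    # A smooth surface concentrates the highlight; a rough one spreads it out.
    print(ggx_ndf(1.0, 0.2), ggx_ndf(1.0, 0.8))
    # Reflectance rises toward 1.0 at grazing angles.
    print(fresnel_schlick(1.0, 0.04), fresnel_schlick(0.1, 0.04))
```

The sharper peak for low roughness is what produces tight highlights on wet asphalt or car paint, while the Fresnel term accounts for the strong reflections seen at grazing angles.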

Virtual Cameras & Sensor Fusion

Surround Cameras

Multiple surround cameras can be implemented with linear or fisheye (panoramic) views. Monocular / stereo dash cam setups for ADAS are also easily configurable.
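The difference between a linear and a fisheye view can be sketched with two standard projection models. The Python below assumes a rectilinear (pinhole) model for the linear camera and the equidistant model for the fisheye one; the brochure does not say which fisheye model the engine uses, so treat the second function as one plausible choice. All names are illustrative.

```python
import math


def project_linear(theta_rad: float, focal_px: float) -> float:
    """Rectilinear (pinhole) projection: r = f * tan(theta).

    Returns the distance in pixels from the image centre for a ray that
    makes angle theta with the optical axis. Only defined below 90 degrees.
    """
    if not 0.0 <= theta_rad < math.pi / 2:
        raise ValueError("a linear camera cannot image rays at 90 degrees or more")
    return focal_px * math.tan(theta_rad)


def project_fisheye_equidistant(theta_rad: float, focal_px: float) -> float:
    """Equidistant fisheye projection: r = f * theta (assumed model)."""
    if theta_rad < 0.0:
        raise ValueError("theta must be non-negative")
    return focal_px * theta_rad


if __name__ == "__main__":
    f = 100.0
    for deg in (10, 45, 80):
        t = math.radians(deg)
        print(deg, project_linear(t, f), project_fisheye_equidistant(t, f))
```

Near the optical axis the two models almost coincide; toward the edge the linear radius grows without bound, which is why very wide surround cameras are usually modeled as fisheye.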

LiDAR

A 360° scanning laser sensor is supported. Distances to surrounding objects can be fed into AI algorithms in real time, regardless of lighting conditions.
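As a rough illustration of how such distance readings might be consumed, the Python sketch below turns one 360° sweep of range values into 3D points in the sensor frame. The input layout (evenly spaced azimuths, a single elevation angle, non-finite values meaning "no return") is an assumption made for this example and does not describe the SDK's actual output format.

```python
import math


def lidar_sweep_to_points(ranges_m, elevation_deg=0.0):
    """Convert one sweep of range readings into (x, y, z) points.

    ranges_m      -- distances in metres, evenly spaced over 360 degrees,
                     starting at azimuth 0 (the sensor's +X axis)
    elevation_deg -- elevation of the laser beam above the horizontal plane
    Readings that are None or non-finite are treated as "no return".
    """
    count = len(ranges_m)
    elevation = math.radians(elevation_deg)
    points = []
    for index, distance in enumerate(ranges_m):
        if distance is None or not math.isfinite(distance):
            continue  # the beam hit nothing within range
        azimuth = 2.0 * math.pi * index / count
        horizontal = distance * math.cos(elevation)
        points.append((
            horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            distance * math.sin(elevation),
        ))
    return points


if __name__ == "__main__":
    sweep = [1.0, 2.0, float("inf"), 4.0]  # four beams, one with no return
    for point in lidar_sweep_to_points(sweep):
        print(point)
```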

Radar

Short-wave radars can be imitated with fast access to the scene depth data. The same precise data can be used for short-range sonar imitation.

Special-Purpose Sensors

UNIGINE 2 Sim can be used to imitate other types of special sensors, e.g. thermal, night vision, and infrared ones.

Georeferenced Scenes

UNIGINE 2 Sim SDK is built for the correct virtual representation of the real world, at scale.

- Increased object positioning precision: 64-bit precision per coordinate

- Support for 3D ellipsoid Earth model (WGS84, other coordinate systems)

- Support for geodata formats (elevation / imagery / vector)

- Ephemeris system that positions celestial bodies according to time and coordinates

- Performance-optimized object cluster system
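To show why 64-bit coordinates matter on an ellipsoid Earth model, the Python sketch below converts WGS84 latitude, longitude, and height to Earth-centred Cartesian (ECEF) coordinates using the standard closed-form formula, then rounds one coordinate to 32-bit precision. The function names are illustrative and are not part of the UNIGINE API.

```python
import math
import struct

# WGS84 defining constants
WGS84_A = 6378137.0                    # semi-major axis, metres
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, height_m: float):
    """Convert WGS84 geodetic coordinates to ECEF (x, y, z) in metres."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    # Prime-vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + height_m) * math.cos(lat) * math.cos(lon)
    y = (n + height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + height_m) * math.sin(lat)
    return x, y, z


def round_to_float32(value: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", value))[0]


if __name__ == "__main__":
    x, y, z = geodetic_to_ecef(0.0, 0.0, 0.3)  # 30 cm above the ellipsoid
    print("64-bit x:", x)
    print("32-bit x:", round_to_float32(x))
    print("error in metres:", abs(round_to_float32(x) - x))
```

At Earth-radius magnitudes a 32-bit float can only represent positions in steps of half a metre, which is far too coarse for placing vehicles and sensors; 64-bit coordinates keep the error many orders of magnitude smaller.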

Automatic Data Annotation

UNIGINE 2 Sim scenes already contain the classification information for each frame, making them an auto-labeled ML dataset. Ground truth data can be easily accessed for each pixel. Unlike manual tagging, the labels come directly from the scene data, so there is no classification error.

- Per-object property system for semantic data

- Easy object masking

- Extensive access to the scene graph

- Fast object visibility checks

- Efficient occlusion control

- 24-bit material masks

- Object bounds info for segmentation evaluation
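As an illustration of what per-pixel ground truth enables, the Python sketch below derives axis-aligned bounding boxes from a label image. The input format (a 2D grid of integer class IDs, with 0 as background) is a hypothetical one chosen for this example; it is not the SDK's mask format.

```python
def bounding_boxes(label_image, background=0):
    """Compute one bounding box per class from a per-pixel label grid.

    label_image -- list of rows, each a list of integer class IDs
    Returns {class_id: (x_min, y_min, x_max, y_max)} in pixel coordinates,
    with inclusive bounds.
    """
    boxes = {}
    for y, row in enumerate(label_image):
        for x, class_id in enumerate(row):
            if class_id == background:
                continue
            if class_id not in boxes:
                boxes[class_id] = (x, y, x, y)
            else:
                x_min, y_min, x_max, y_max = boxes[class_id]
                boxes[class_id] = (
                    min(x_min, x), min(y_min, y),
                    max(x_max, x), max(y_max, y),
                )
    return boxes


if __name__ == "__main__":
    # 1 = car, 2 = pedestrian (IDs invented for the example)
    frame = [
        [0, 1, 1, 0],
        [0, 1, 1, 2],
        [0, 0, 0, 2],
    ]
    print(bounding_boxes(frame))
```

Because the labels come straight from the renderer, boxes computed this way need no manual correction before they are used for training or evaluation.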

Easy Scenario Reconfiguration

You can get an unlimited number of scenarios by changing any of these variable parameters, which are dynamically controlled via API:

- Autonomous vehicle position

- Surrounding traffic

- Pedestrians

- Obstacles

- Accidents

- Road condition

- Lighting conditions

- Weather conditions

The increased number of situations and test cases explored in this way should improve system reliability dramatically.
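A minimal Python sketch of how the parameters listed above might be randomized to produce many scenarios follows. Every name, range, and probability in it is a placeholder for illustration; the actual engine API calls that would apply a scenario to a scene are not shown.

```python
import random
from dataclasses import dataclass

# Illustrative value sets, not an exhaustive list
ROAD_CONDITIONS = ("dry", "wet", "snow", "ice")
WEATHER = ("clear", "rain", "fog", "snow")


@dataclass(frozen=True)
class Scenario:
    ego_position_m: tuple   # (x, y) of the autonomous vehicle
    traffic_vehicles: int
    pedestrians: int
    obstacles: int
    accident: bool
    road_condition: str
    time_of_day_h: float    # drives the lighting conditions
    weather: str


def sample_scenario(rng: random.Random) -> Scenario:
    """Draw one scenario; all ranges are arbitrary example values."""
    return Scenario(
        ego_position_m=(rng.uniform(-500.0, 500.0), rng.uniform(-500.0, 500.0)),
        traffic_vehicles=rng.randint(0, 40),
        pedestrians=rng.randint(0, 20),
        obstacles=rng.randint(0, 5),
        accident=rng.random() < 0.05,
        road_condition=rng.choice(ROAD_CONDITIONS),
        time_of_day_h=rng.uniform(0.0, 24.0),
        weather=rng.choice(WEATHER),
    )


def generate_scenarios(count: int, seed: int) -> list:
    """Generate a reproducible batch: the same seed gives the same scenarios."""
    rng = random.Random(seed)
    return [sample_scenario(rng) for _ in range(count)]


if __name__ == "__main__":
    for scenario in generate_scenarios(3, seed=42):
        print(scenario)
```

Seeding the generator keeps each batch reproducible, so a failing test case can be replayed exactly.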

High Performance

UNIGINE 2 Sim was designed to handle large, complex procedural scenes, filled with dynamic entities.

The 3D engine delivers high, stable performance even on consumer-grade hardware, which saves time in iterative AI training.

UNIGINE has cooperated closely for many years with leading hardware vendors (AMD, Intel, NVIDIA) on performance optimization.

Vehicle Dynamics Simulation

UNIGINE 2 Sim SDK includes a generic vehicle dynamics system, which is adequate for background traffic or prototype applications (before you bring in more sophisticated software algorithms or hardware-in-the-loop simulation).

- Main vehicle systems: engine, gearbox, transfer case, axles, differential, wheels, suspension, steering, brakes

- Configurable drivetrain: FWD, RWD, 4WD, multi-axle vehicles

- Simulation of various surface conditions (dry, wet, snow-covered, or icy road, mud, and so on)

- Visual control/debug of parameters in real-time
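To give a feel for why surface conditions matter, the Python sketch below estimates braking distance with a simple point-mass model, d = v² / (2·μ·g). This is a textbook approximation, not the SDK's vehicle dynamics model, and the friction coefficients are rough illustrative values.

```python
GRAVITY = 9.81  # m/s^2

# Rough tyre-road friction coefficients, for illustration only
FRICTION = {
    "dry": 0.8,
    "wet": 0.5,
    "snow": 0.25,
    "ice": 0.1,
}


def braking_distance_m(speed_kmh: float, surface: str) -> float:
    """Distance to stop from speed_kmh with locked-wheel friction braking.

    Point-mass model: ignores reaction time, load transfer, ABS, and
    aerodynamic drag.
    """
    if speed_kmh < 0.0:
        raise ValueError("speed must be non-negative")
    speed_ms = speed_kmh / 3.6
    mu = FRICTION[surface]
    return speed_ms * speed_ms / (2.0 * mu * GRAVITY)


if __name__ == "__main__":
    for surface in FRICTION:
        print(surface, round(braking_distance_m(100.0, surface), 1), "m")
```

Even in this crude model the stopping distance on ice is eight times that on a dry road, which is the kind of variation a control system has to be validated against.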

There are also essential built-in components for traffic simulation (spatial triggers, pathfinding module).

Powerful C++ or C# API

Support for both C++ and C# programming languages provides decent flexibility for development teams. Both APIs are identical in terms of the access level:

- Deep access to the rendering pipeline

- Flexible multi-viewport mode

- Extensive access to the scene graph and all parameters

- CUDA support for fast GPU-CPU data transfer

- Raw texture access

- Extensible design for custom objects and shaders

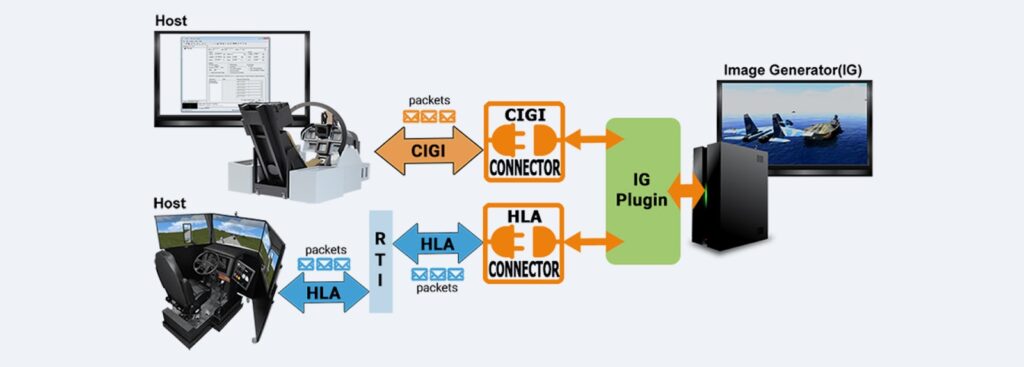

Proven By Training Humans

UNIGINE 2 Sim has been proven for years in building professional simulators to train people. The SDK was designed to work as a part of modular distributed systems.

Generating virtual 3D environments for humans and for AI involves many of the same tasks.

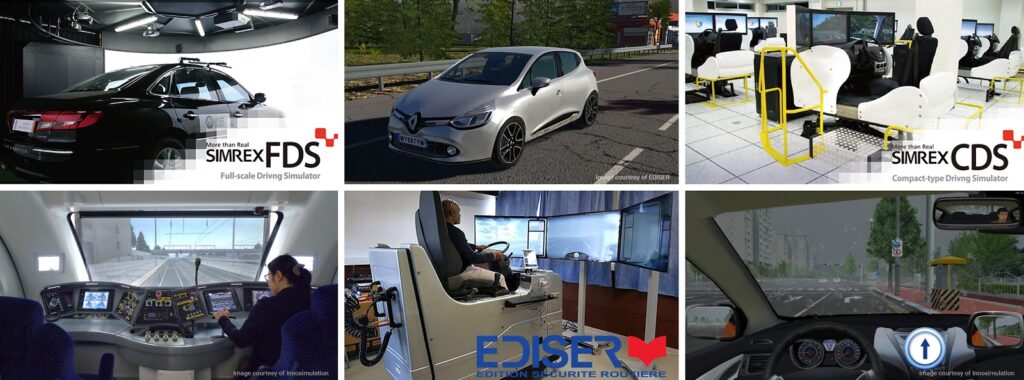

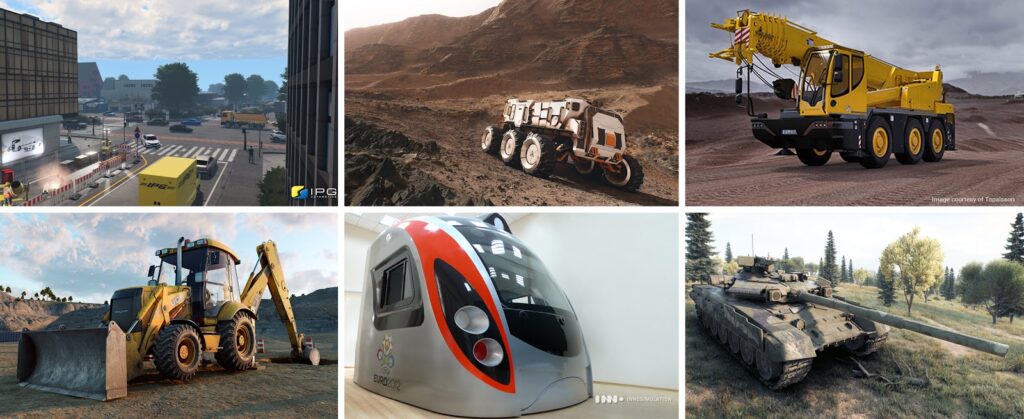

Various Types Of Vehicles

A great variety of driving simulation systems created by our customers are installed worldwide. Cars, trucks, special vehicles, trains, military vehicles: all sorts of ground transportation simulators are powered by UNIGINE Engine.

Streamlined Content Workflow

- WYSIWYG 3D scene (visual database) editor

- Landscape tool with support for procedural data refinement

- PBR workflow for 3D assets (compatible across modern engines)

- Support for CAD and GIS data formats

- 3D content library

Team Of Experts

The UNIGINE team has worked on simulation and training tasks for more than ten years (and on AI training-specific tasks for four years), participating directly in many projects, gaining hands-on experience, and delivering many turnkey projects.

All of this experience has gone into the UNIGINE 2 Sim software platform, so our clients can use ready-made components developed specifically for such tasks.

Our technical experts are always here to help your team with any questions.

UNIGINE Advantages Summary

- Photorealistic image quality

- Camera and sensor output

- Automatic semantic data labeling

- Easy scenario reconfiguration

- Deep access to the rendering pipeline

- Embeddable into C++ / C# codebase

- Extremely performance-optimized

- Georeferenced scenes support

- A large number of out-of-the-box features

- Visual scene editor + 3D content library

- Generic vehicle dynamics simulation

- Support for various types of vehicles

- Proven in human-oriented simulators

- Brought to you by experienced, enterprise-oriented experts

Autonomous Everywhere

Regardless of the type of autonomous vehicle, its AI must be trained, facing the same common challenges, before it reaches mass deployment:

- Self-driving cars (SAE Level 4/Level 5)

- Autonomous flying drones and UAVs

- Maritime autonomous surface ships and submarines

- Autonomous spacecraft

UNIGINE 2 Sim can visualize all of these scenarios.

Developers from 250+ companies across all continents use UNIGINE technologies in their projects.