We are back on the blog with the story of our latest release. In April, the UNIGINE team released Superposition, a new GPU benchmark with VR features. Along the way we took plenty of new knocks, came up with a couple dozen last-minute rescue solutions, and developed new 3D graphics technology.

Inside this post: lots of beautiful rendering, several dramas with a technical slant, 4.5 artists, evil Valve moderators, upset AMD, NVIDIA and Intel, and commits from a hospital bed. Come on in!

In the beginning there was a demo

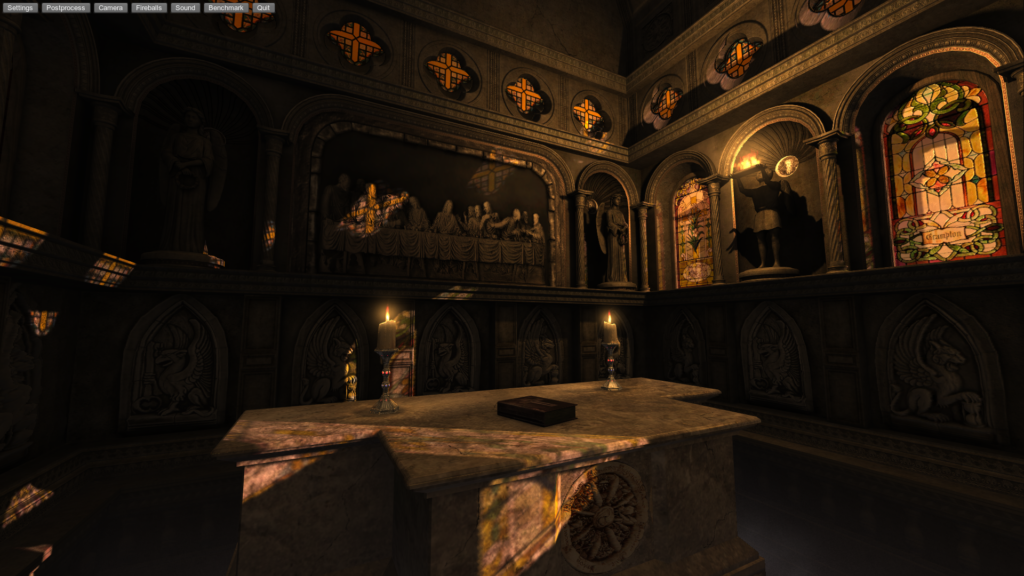

We released our first benchmark 10 years ago, in 2007. At the time we were making our next 3D demo, and it turned out to be so demanding that it ran only on the top-end graphics cards of the day. The rest could not cope: they slowed down and overheated. We started wondering what to do with it, and decided that since the demo loads the hardware so heavily, we might as well put that to use. So our first benchmark, Sanctuary, appeared as a by-product, and UNIGINE gained a new line of business. The 2007 graphics are no longer impressive, but they do bring on nostalgia.

Who needs benchmarks and why?

GPU benchmarks have a fairly niche but steady audience. The basic free versions are downloaded tens of millions of times. Overclockers use them to compete at squeezing every bit of performance out of their hardware. Gamers use them to check whether their graphics card can handle the latest hit game. Hardware manufacturers need benchmarks as a “ruler” for measuring the results of their development work. For computer shops and repair shops, a benchmark is a final-check tool for the stability of an assembled computer. We released our third benchmark, Heaven, in 2009, and it is still used by millions of people around the world.

Why do we need them? Since a benchmark’s job is to load the hardware to the maximum, we can show, without holding back, the level of graphics achievable on the UNIGINE engine and what will become the standard in 3D graphics tomorrow and the day after. So benchmarks are also a very clear technology showcase and extra marketing. The phrase we hear most often from customers who come to us for the engine is: “Oh, I know your benchmarks, I’ve been using them all my life!” There is a downside, though: some people think the benchmark is just an application running on a slow engine (which is far from the case). We have to explain that the extreme load is exactly what is intended.

Thanks to benchmarks, we also work closely with GPU manufacturers, so the video drivers in your computer always contain UNIGINE optimizations. It is good for everyone: vendors get a reliable performance test, and our developers get access to tomorrow’s hardware. Naturally, there is a fly in the ointment: vendors often hate us. Each of them feels that their cards perform poorly on our benchmarks while the competitors score too high. So NVIDIA periodically believes we have sold out to AMD, and AMD believes we have sold out to NVIDIA.

We planned a demo, and then it began

The project that eventually became the Superposition benchmark began in March 2016. We wanted to add a new interior 3D demo to the UNIGINE 2 engine SDK. Why an interior?

We had accumulated many demos with vast open spaces stretching to the horizon. As a result, some customers began to see UNIGINE 2 exclusively as an engine for planetary-scale scenes. It really does handle them excellently (on UNIGINE 2 you can build real-time scenes up to the size of the solar system), but it is just as good at detailed close-ups. What the UNIGINE 2 SDK lacked was a project showing everything the engine can do at close range.

A cozy retro setting

We decided right away that we did not want a modern interior: too sterile, too visually boring. Beautiful, yes. But with yet another polished picture, the eye just slides off: oh, an interior, scroll on.

We wanted a demo scene that people would want to examine and explore. For that, it is not enough to fill the space with objects and produce a great render. For a room to be interesting to “be in” and study closely, there has to be a story behind it.

So the artists had to come up with a concept and a setting that would make the room both unique and recognizable. Since our engine, and our benchmarks even more so, are used from Australia to Scandinavia and from Singapore to California, we needed universal imagery that people in any country and culture would understand. It also had to spark the imagination at first glance and invite viewers to fill in the rest themselves.

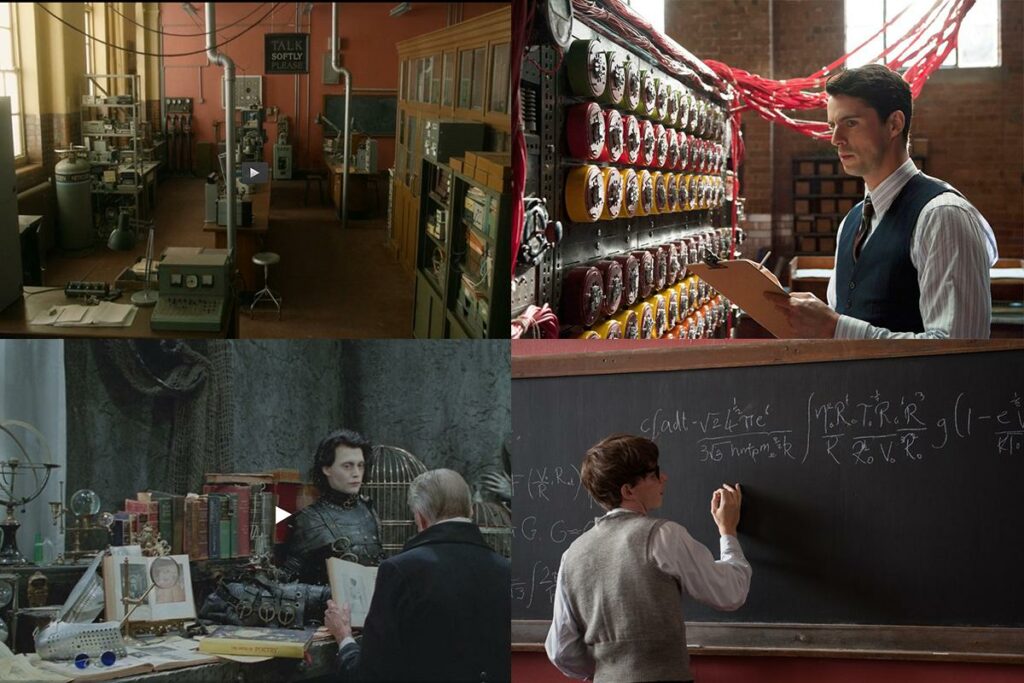

The first references for the concept of the future benchmark came from films: science fiction and films about prominent 20th-century scientists.

Film references suited an international product best: everything made in Hollywood is known to everyone, everywhere. We had already decided that the “room” would be a classroom or a laboratory. So the artists watched a sea of films in which people study or run experiments, from “Edward Scissorhands” and “The Fly” to “The Imitation Game”.

What caught our attention most was the style of scientific laboratories of the 1940s and 50s: the boom in science and the romance surrounding it, which at the same time gave rise to the image of the genius scientist spending days and nights at work. So we settled on the setting: a 1950s classroom-laboratory. The artists assembled a rough first scene from primitives and sat down to create content.

Pre-production: a rough blockout of the scene’s composition.

The artists were given complete freedom and 3 months. The main requirement was to produce the highest-quality, most content-rich scene possible. Although turning the demo into a benchmark was on the table from the start, for now the task was limited to a static scene with no logic but with a large number of different objects. At pre-production we estimated that we needed about 120 unique items. For 4 people in 3 months, that was a difficult task, but not an impossible one.

This is how the laboratory looked in the second month of work.

And this is how it looked six months later, in October.

Not a telescope at all, but a real portal

It was the second half of the second month. Our laboratory was filling up with details and looking more and more like itself. But it still lacked one small thing: the central object. Davyd, the lead 3D artist and art director of the whole project, was responsible for the concept and production of this “protagonist”.

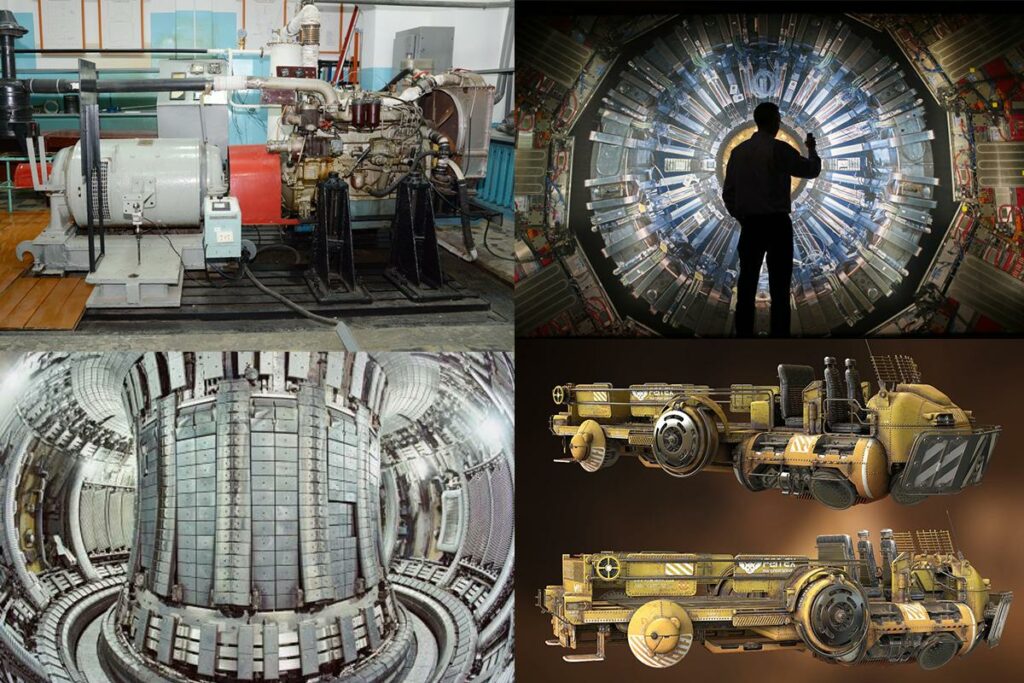

To develop the design, we collected many references: laboratory equipment, machine tools, robots, sci-fi units. The number of files in the classroom\refs\center_object folder kept growing. The initial idea was a teleporter for small objects: two stands, with an object disappearing from one and being teleported to the other. But after a couple of days of persistent blocking (what artists call the stage of building a silhouette from primitives), the result was still unsatisfying. Nothing we came up with fit the geometry of the room. The central object either had to stand in the very center of the room, where it looked like an altar, or somewhere in a corner, where it stopped being central.

There were many ideas for the central object, and even more visual references.

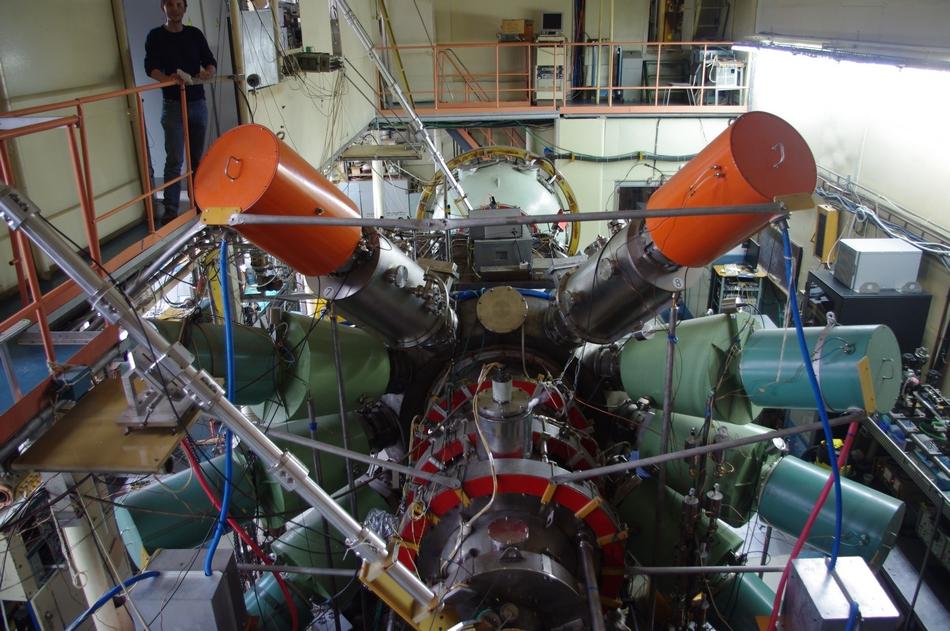

In the end, Davyd concluded that symmetric geometry was worth a try. Digging through the references, he found a photograph of this symmetrical machine with colored protruding “horns”. They somewhat resembled the spikes on the Statue of Liberty’s crown, and at that moment the image “clicked” right before our eyes: we needed a large, low-detail object with spikes on its “head”. Right, we put doors at the front so a person can walk in and settle down inside. To make that more comfortable, we give it a slight tilt. And at the back we need “guts”: cylinders, tubes, wires, and always asymmetric! The idea and the image had taken shape; all that remained was to sit down and build it.

This photo is what finally led us to the Superposition portal.

As we learned after the release, the photo shows the gas-dynamic trap at the Institute of Nuclear Physics in Novosibirsk. We hear the institute offers tours, so if you live in Novosibirsk, you have every chance to see the prototype of our portal in person.

By the way, we said we had 4 artists, but there were actually 4.5 of them. Closer to winter, when the number of objects in the laboratory was approaching 700 (and all of them were interactive!), we were joined by a freelance remote artist from the mining town of Prokopyevsk. Stepan, a school classmate of our lead artist Davyd, had started “modeling something” with him back in school but later gave it up. Now he decided to catch up.

“Maybe you need something modeled? Otherwise I’ll die of boredom here,” Stepan wrote from somewhere in the Kuzbass winter. Out of pure interest and to level up his skills, he was ready to take on small objects. Rulers, scissors, compasses, pens, pencils, erasers and a whole sea of other small things that create the atmosphere of a working mess in the laboratory were all made by Stepan’s hands. As a result, the release version of Superposition has more than 900 objects.

We’re making a new benchmark, with VR and interactivity

The three months allotted for content production turned into four. The planned scene was ready. All that remained was to add a minimum of logic and interactivity, and it could ship in the SDK!

But two things drove the final transformation of the demo into a benchmark. First, by September there were already 600 objects in the scene, and it was doing exactly what a benchmark is supposed to do: properly loading the GPU. Second, Sasha, a game logic programmer, joined the team. All the other programmers were assigned to other projects, but now we had one more person with the skills needed to build the interactive part.

We had wanted interactivity for a long time, because until then all UNIGINE benchmarks had been rather static. You could walk around Heaven and Valley, but nothing more. Because of this, we often heard the opinion that everything in the UNIGINE engine is static. You want interactivity? You’ll get interactivity!

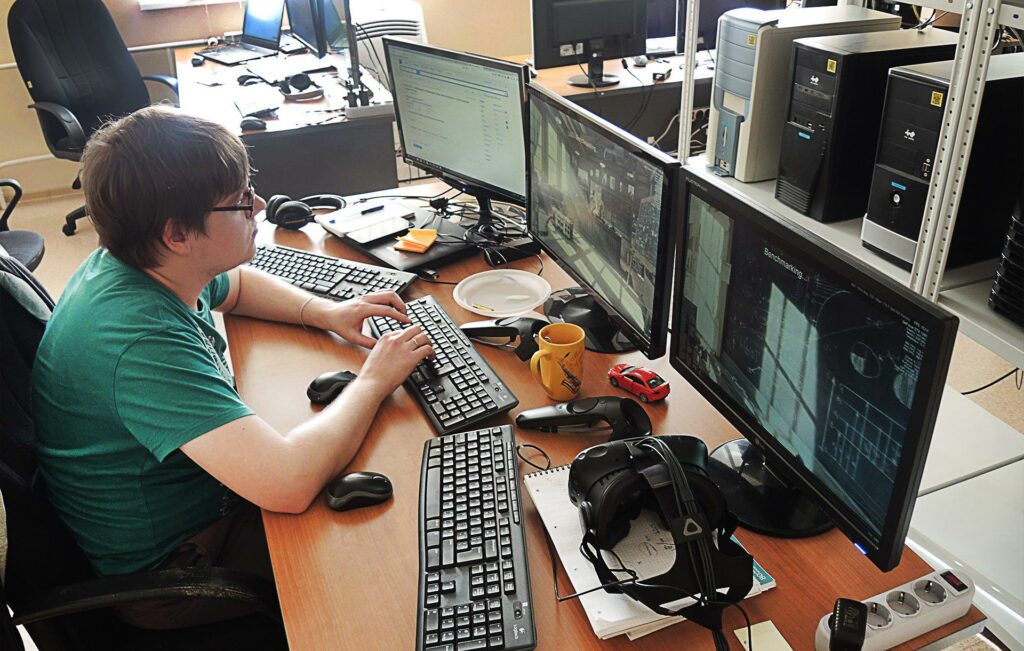

Meanwhile, mass-market VR was booming. The HTC Vive had come out, and Oculus Touch was due by Christmas. We added VR support for UNIGINE 2 engine customers long ago: the engine has been compatible with the Oculus Rift since 2013. But UNIGINE did not yet have a publicly available VR product. And our laboratory room, with its mass of objects and the legend of the Professor, was simply begging for VR.

So by the fall the task had grown: the result would be a benchmark with an interactive mode, VR support and the most advanced graphics, demanding enough to keep graphics cards busy until 2020. Hooray, more work!

The sudden arrival of VR brought a problem: things that looked fine on a monitor looked distorted or out of proportion in virtual reality. For example, the lecture table was far too big. “You could carve up a horse on it!” said the artists when they looked at the scene through a headset. So it was back to the content again, adapting everything for VR.

We had to spend a lot of time on interacting with objects in VR: the controllers have no force feedback, yet the user needs a realistic sense of holding different objects in their “hands”. Throwing objects was also tricky and took several iterations (the hard part was determining a realistic throw vector). There are already good articles on this, for example this one: the original and a translation on Habr.
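The post does not say how the throw vector was finally computed. A common approach, and only an assumption here, is to average the controller’s velocity over the last few frames before release, because the velocity from the single last frame is noisy. A minimal sketch; `ThrowEstimator` and the five-sample window are hypothetical:

```python
from collections import deque

Vec3 = tuple[float, float, float]


class ThrowEstimator:
    """Hypothetical helper that smooths controller velocity for throws."""

    def __init__(self, window: int = 5) -> None:
        # deque(maxlen=...) silently drops the oldest sample when full.
        self.samples: deque = deque(maxlen=window)

    def add_sample(self, velocity: Vec3) -> None:
        """Call once per frame while the object is held."""
        self.samples.append(velocity)

    def release_velocity(self) -> Vec3:
        """Mean of the buffered samples; zero vector if none were recorded."""
        n = len(self.samples)
        if n == 0:
            return (0.0, 0.0, 0.0)
        x = sum(s[0] for s in self.samples) / n
        y = sum(s[1] for s in self.samples) / n
        z = sum(s[2] for s in self.samples) / n
        return (x, y, z)
```

Calling `add_sample` every frame and `release_velocity` when the grip is released gives a throw that follows the hand’s recent motion rather than one jittery frame; a larger window is smoother but reacts more slowly to a last-moment flick.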

Movement in VR is special too. For example, we found experimentally that when the turn buttons are pressed, it is better to rotate the camera in discrete steps than to speed it up smoothly: that way the user gets less motion sick. Fortunately, teleportation in VR applications is already more or less standardized: aim and jump.
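Discrete (snap) turning can be sketched as a function applied once per button press rather than every frame. The 30° step and the -1/+1 direction convention are our assumptions, not values taken from the benchmark:

```python
def snap_turn(yaw_deg: float, direction: int, step_deg: float = 30.0) -> float:
    """Rotate the camera yaw by one fixed step and wrap it into [0, 360).

    direction: -1 turns left, +1 turns right (hypothetical convention).
    """
    if direction not in (-1, 1):
        raise ValueError("direction must be -1 or +1")
    return (yaw_deg + direction * step_deg) % 360.0
```

Because the view jumps instantly instead of rotating over many frames, the eyes never see a smooth rotation that the inner ear does not feel, the mismatch generally blamed for motion sickness.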

The first “field trials” of the Superposition benchmark took place at an IT conference in Tomsk in November. There, without leaving the booth, we programmed two new features: the hat and the cigarette became interactive.

Steam drama

Once the benchmark’s feature set was finally defined, we decided to try releasing it on Steam through the Greenlight system. From past experience with Heaven, which got the “green light” when it was no longer needed, we knew that the Steam software section was a black box with the illusion of democracy. Users appear to vote, but Valve moderators decide anyway. Moreover, nobody knows the timing or the selection criteria. Even if you hold first place for six months, passing Greenlight is not guaranteed. Superposition took off right away: in the first two weeks of voting, the benchmark received 95% “yes” votes (the usual rating for Greenlight projects is around 40%). But, as it turned out, that did not matter.

Before New Year, we happily ordered pizza to celebrate first place in the Greenlight race: out of 2,000 contenders, Superposition was the most anticipated on Steam.

Deciding that you can never have too much festive mood, we also dressed up the portal for the holidays. But we celebrated too early: almost immediately after the New Year holidays, we received a letter from the moderators saying they had removed us from the vote. It turned out that shortly before we applied, Steam had introduced new rules, and under them the benchmark no longer fell into any software category allowed in Greenlight. Steam exploded with angry comments aimed at Valve, the press wrote about it, and forums discussed it. But Valve did not back down. That turned out not to be so bad: we later found that launching through the Steam client slows down the system and skews performance. Not by much, but for professional overclockers any measurement error is critical.

I’ll name that graphics card in four numbers!

Four years had passed since the release of Valley, and the algorithm that recognizes the GPU model and other system characteristics needed a serious update. Programmer Tolya sat down to develop an updated detector. He had joined UNIGINE on his second attempt and was actually a web programmer. But by that second attempt he had brought his C++ up to the level of “I can, I just need practice”, and he volunteered to take on the detector upgrade to level it up further.

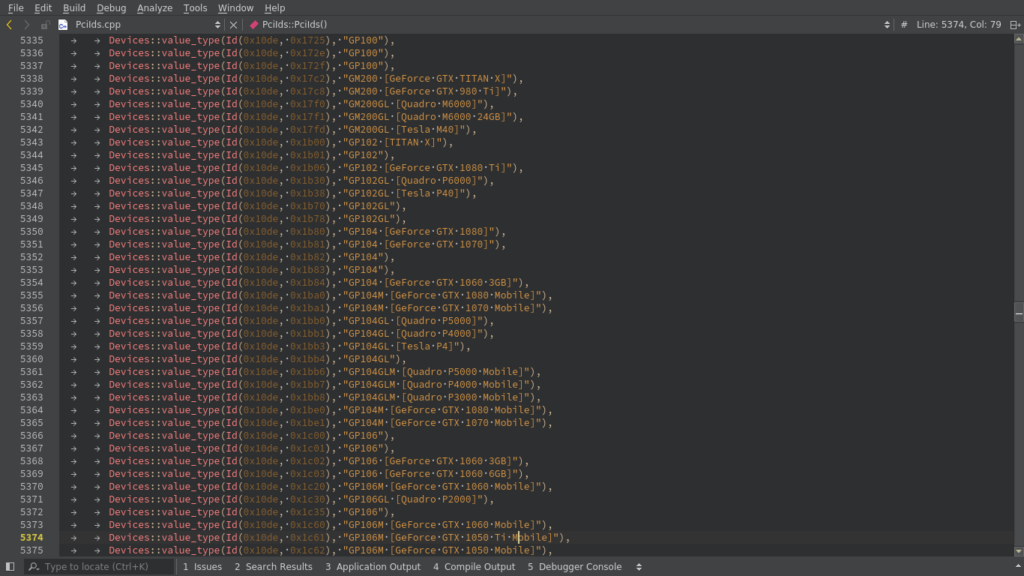

Where does the GPU data come from? Every graphics card carries four numeric IDs: vendor, device, subsystem vendor and subsystem device. The easiest option is to get them from the driver API. We take the IDs, match them against our hardware database and get the exact, full name of the card. But that is the “I’m feeling lucky” option.
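The database lookup can be sketched as an exact match on all four IDs with a fallback to the chip alone (vendor plus device), so an unfamiliar board built on a known chip still gets a sensible name. In PCI terms the four values are the vendor ID, device ID, subsystem vendor ID and subsystem ID. The fallback strategy is our illustration, and the table entries below are made-up placeholders, not real hardware:

```python
from typing import NamedTuple


class PciId(NamedTuple):
    vendor: int
    device: int
    subsys_vendor: int
    subsys_device: int


# Placeholder entries; a real database would hold thousands of rows.
FULL_NAMES = {
    PciId(0x1111, 0x2222, 0x1111, 0x0001): "Example GPU 2222, reference board",
}
CHIP_NAMES = {
    (0x1111, 0x2222): "Example GPU 2222",
}


def identify(ids: PciId) -> str:
    """Exact board match first, then chip-only match, then 'unknown'."""
    exact = FULL_NAMES.get(ids)
    if exact is not None:
        return exact
    chip = CHIP_NAMES.get((ids.vendor, ids.device))
    if chip is not None:
        return f"{chip} (board {ids.subsys_vendor:04x}:{ids.subsys_device:04x})"
    return f"Unknown GPU {ids.vendor:04x}:{ids.device:04x}"
```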

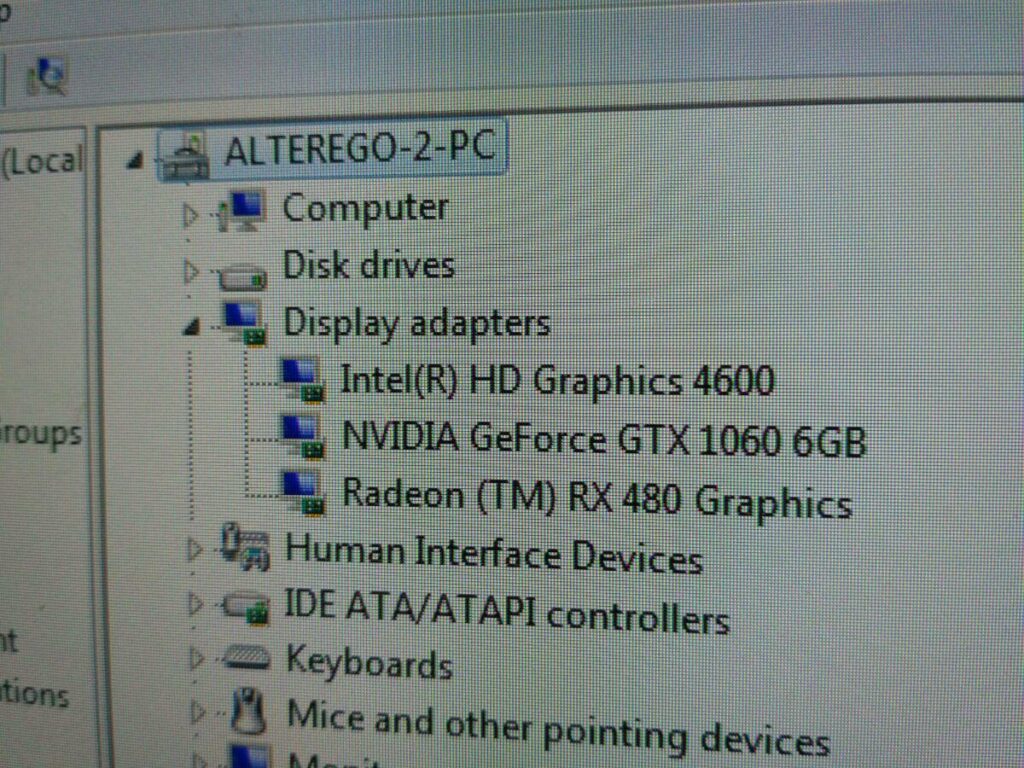

Recognizing even one graphics card is not easy, but what if the system has three different ones? The answer is below.

The catch is that you can get the IDs from the driver API only on Windows. The drivers there are proprietary, and our detector extracted the data it needed from them painlessly. On Linux the zoo of drivers is much bigger. There are proprietary drivers there too, but Linux users love everything open, so there are many options. Tolya’s life was complicated further by the fact that most Linux drivers have no suitable API at all, so the card configuration cannot be pulled out in a few lines of code. We have to take the long way around.

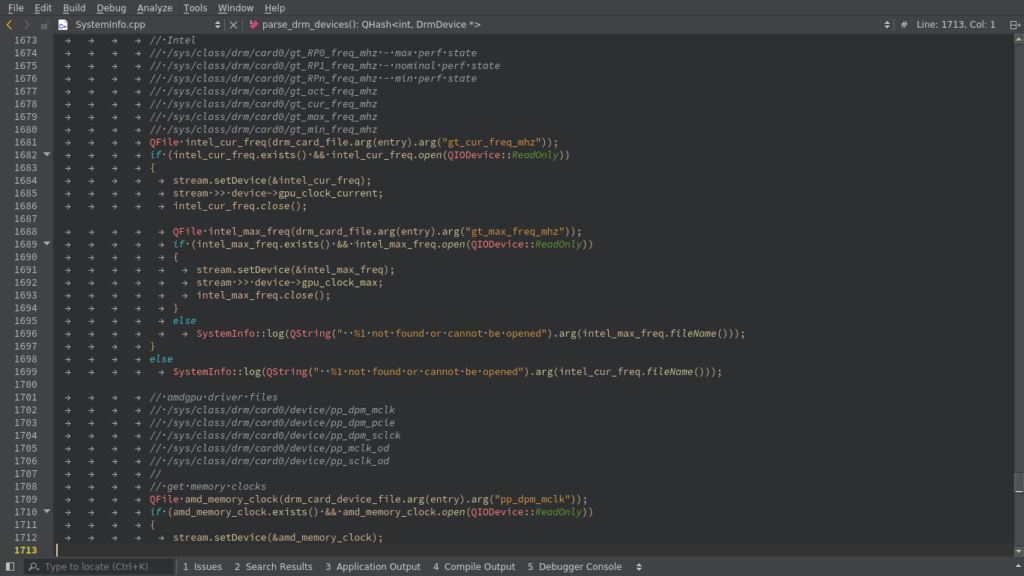

On Linux, the detector has to dig through the files produced by the video drivers. Depending on the card model and the driver, these files are stored in different places and named differently. One chunk of the detector code knocks on system commands, temperature sensors and other places, finds the files and, like a good cop, extracts information from them. Doing this without root access is especially hard.

A snippet of the detector code that fetched data for us on Linux.

But what if the system has several graphics cards from different vendors? To figure out which card is actually active, Tolya wrote a 300-line piece of code that took snapshots while the benchmark was running.
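As one concrete example of where such files live (our illustration, not the detector’s code), the Linux kernel exposes each PCI graphics card under `/sys/class/drm/cardN/device/`, with the four IDs in small text files named `vendor`, `device`, `subsystem_vendor` and `subsystem_device`, which are normally readable without root. A minimal sketch that enumerates them; the `root` parameter exists only so the function can be pointed at a test directory:

```python
import re
from pathlib import Path

ID_FILES = ("vendor", "device", "subsystem_vendor", "subsystem_device")


def read_drm_cards(root: str = "/sys/class/drm") -> dict[str, tuple[int, ...]]:
    """Return {card name: (vendor, device, subsys vendor, subsys device)}."""
    cards: dict[str, tuple[int, ...]] = {}
    base = Path(root)
    if not base.is_dir():
        return cards  # not Linux, or sysfs not mounted
    for entry in sorted(base.iterdir()):
        # Keep "card0", "card1", ...; skip connectors like "card0-HDMI-A-1".
        if not re.fullmatch(r"card\d+", entry.name):
            continue
        dev = entry / "device"
        try:
            # Files contain values like "0x1002\n"; base 16 accepts the 0x prefix.
            ids = tuple(int((dev / f).read_text().strip(), 16) for f in ID_FILES)
        except (OSError, ValueError):
            continue  # not a PCI device, or a file is missing or unreadable
        cards[entry.name] = ids
    return cards
```

Utilization, temperature and clock readings are far less uniform across drivers than these ID files, which is where the real zoo begins.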

First of all, the code checked GPU utilization (the idea came from Misha, a lead graphics programmer). We found empirically that even an unused graphics card can show 1 to 10% utilization. So we set the threshold at 15%: if utilization came out higher, the card was considered active.
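The utilization rule reduces to a threshold test. In this sketch we compare the mean utilization over the run with the 15% cut-off; the post does not say whether the detector used the mean, the peak or something else, so that choice (and the function name) is an assumption:

```python
from statistics import mean

# Idle cards were observed at 1-10% utilization, so 15% leaves a margin.
ACTIVE_UTILIZATION_PCT = 15.0


def active_by_utilization(samples: dict[str, list[float]]) -> list[str]:
    """Return the cards whose mean utilization over the run exceeds 15%.

    samples maps a card name to its utilization readings in percent.
    Cards with no readings are skipped (utilization is not always available).
    """
    return [
        card
        for card, values in samples.items()
        if values and mean(values) > ACTIVE_UTILIZATION_PCT
    ]
```

Using the mean rather than the peak means a brief spike on an idle card (a desktop compositor redraw, say) does not flip it to “active”.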

But utilization could not be measured on every card, so we had to dig further. The code looked into the Windows drivers and the back alleys of Linux and, every 200 milliseconds, asked: “Hey, how are you doing on temperature and clock speed?” At the end of the run, the minimum and maximum of these parameters showed which card had been active. There were “nuances” here too. For example, if the cards sit next to each other, one can simply heat up the other. So the algorithm did not count temperature changes of up to 2 °C.
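The min/max comparison can be sketched as follows. The 2 °C tolerance comes from the text; the 100 MHz clock tolerance and all names are hypothetical, and the 200 ms sampling loop itself is left out:

```python
from dataclasses import dataclass, field

# A neighbouring card can warm an idle one slightly, so small rises are noise.
TEMP_NOISE_C = 2.0
# Hypothetical clock tolerance; the post gives no number for it.
CLOCK_NOISE_MHZ = 100.0


@dataclass
class SensorTrace:
    """Readings collected every 200 ms during the benchmark run."""
    temps_c: list[float] = field(default_factory=list)
    clocks_mhz: list[float] = field(default_factory=list)


def swing(values: list[float]) -> float:
    """Max minus min, or 0.0 for an empty list."""
    return max(values) - min(values) if values else 0.0


def looks_active(trace: SensorTrace) -> bool:
    """A card counts as active if its temperature or clock moved noticeably."""
    return swing(trace.temps_c) > TEMP_NOISE_C or swing(trace.clocks_mhz) > CLOCK_NOISE_MHZ
```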

One of many special cases: on some AMD cards, vendor ID 1002 was returned in decimal rather than in hexadecimal, as it should be. In that case the card was not detected at all, and the detector reported “0 graphics cards”.
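AMD’s PCI vendor ID is 0x1002, which is 4098 in decimal; NVIDIA’s is 0x10DE and Intel’s is 0x8086. One way to guard against this kind of encoding mix-up, sketched here as our assumption rather than the detector’s actual fix, is to accept both encodings and keep whichever parse matches a known vendor:

```python
from typing import Optional

# Well-known PCI vendor IDs.
KNOWN_VENDORS = {0x1002: "AMD", 0x10DE: "NVIDIA", 0x8086: "Intel"}


def parse_vendor_id(raw: str) -> Optional[int]:
    """Parse a vendor ID that may be hex (expected) or decimal (buggy driver).

    Hex is tried first and decimal second; a value is accepted only if it
    matches a known vendor, which is what resolves the ambiguity.
    """
    text = raw.strip().lower()
    bases = (16,) if text.startswith("0x") else (16, 10)
    for base in bases:
        try:
            value = int(text, base)
        except ValueError:
            continue
        if value in KNOWN_VENDORS:
            return value
    return None
```

The trade-off is that a genuinely unknown vendor comes back as `None` instead of a possibly misparsed number, which is easier to spot in logs than a bogus ID.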

Each such special case needed its own handling in the algorithm. Given that the database of graphics cards we have collected holds more than 9,000 variants, the number of such details turned out to be a bit more than “a lot”.

In general, hardware identification is a non-trivial task, and almost nobody really knows how to do it cross-platform. You can, of course, license the CPUID SDK (as 3DMark does) and the GPU-Z SDK, but they work only on Windows.

After three weeks of Tolya’s superhuman efforts, the detector was ready, and we checked it on all the hardware we could get our hands on. It became clear that this was a drop in the ocean of GPU variety. Attention, question: where do you get a lot of different hardware to test on a decent sample?

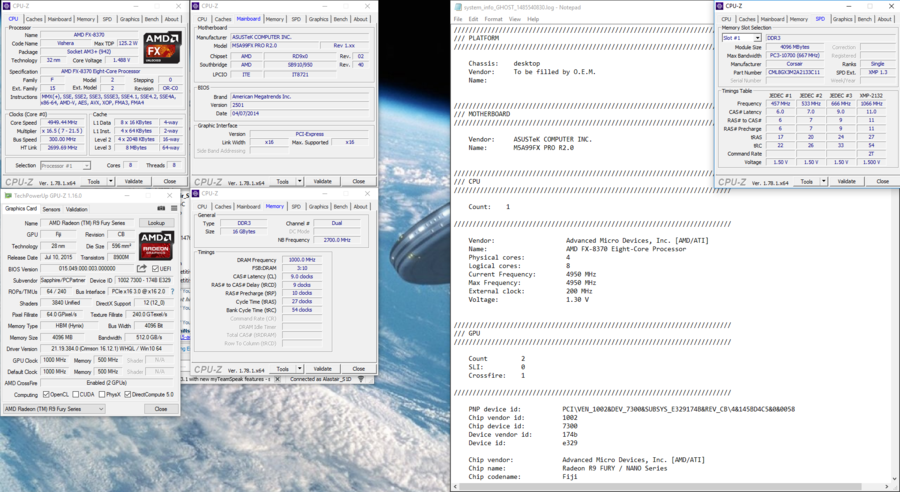

An internet full of configurations

So we announced open testing. Two detector builds, one for Windows and one for Linux, were distributed across forums and communities with a request to help with testing. Anyone who wanted to take part had to run the detector and send us the resulting logs plus screenshots from CPU-Z and GPU-Z, the only reliable reference we had.

Have you ever asked strangers on a forum to download and run an unknown *.exe? Give it a try! “Don’t run it, it’s a virus!”, “It mines bitcoins!”, “Doctor, it detected my motherboard’s serial number!”, “It’s some kind of Russian thing. What, you’re telling me Russians work at UNIGINE? Clearly phishing, don’t fall for it!”

Open testing is awesome. But when you end up with more than 400 such screenshots, you really have to sweat.

We got banned again and again, and our PR manager corresponded with moderators, proving that it really was UNIGINE and not an impostor. Then we posted on our official social networks: keep calm, comrades, testing is under way. The wary public realized it really was us, and that they could take part in developing the new UNIGINE benchmark.

And then the real log rush began. Andrei, the Superposition project manager, spent days going through mail, answering emails and downloading user logs scattered across forums and social networks. In total we collected 450 results and used them to calibrate the detector. Volunteers still send us their logs from time to time.

Want some new tracing?

We originally wanted to release the benchmark at the end of 2016, but the release slipped to the first quarter of 2017. First, the Christmas rush is not the best time to launch a mass-market product. Second, our priority is, after all, the engine and shipping the UNIGINE 2.4 SDK on schedule. On the plus side, the delay gave us time to add a new tracing technology to Superposition: SSRTGI (Screen Space Ray Tracing Global Illumination), developed jointly by Misha, one of the engine programmers, and Davyd, the lead artist.

Lighting without SSRTGI // Lighting with SSRTGI on

The usual screen-space effects, SSAO and SSGI, do not use tracing. They do make a 3D image look more realistic, but by cheating: they try to produce a picture that looks real, not to reproduce how real lighting works. This helps to a degree, but the difference is visible at a glance.

We built a technology based on the principle of ray tracing, following how light behaves in the real world. SSRTGI accounts for how rays travel, reflections from objects, occluders and shadowing, and it smooths the normals at transitions between objects. As a result, we get a picture comparable in quality to an offline render, but in real time. Objects remain interactive: each can be moved wherever you like, and it will not suddenly look out of place against the rest of the picture, as often happens in games.
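SSRTGI’s internals are left for a later post, so the following is not UNIGINE’s implementation. It only illustrates the general building block behind screen-space ray tracing: marching a ray across the depth buffer until it passes just behind a stored surface. The 1D simplification, the names and the thickness value are all assumptions:

```python
import math
from typing import Optional


def march_ray(
    depth_row: list[float],
    x0: float,
    z0: float,
    dx: float,
    dz: float,
    max_steps: int = 64,
    thickness: float = 0.5,
) -> Optional[int]:
    """March a ray across one row of a depth buffer; return the hit pixel or None.

    depth_row[i] is the depth stored for pixel i (larger means farther away).
    The ray starts at screen position x0 with depth z0 and advances by
    (dx, dz) per step. It "hits" when it ends up behind the stored surface
    by no more than `thickness`, so thin foreground objects are not
    treated as infinitely deep walls.
    """
    x, z = x0, z0
    for _ in range(max_steps):
        x += dx
        z += dz
        i = math.floor(x)
        if i < 0 or i >= len(depth_row):
            return None  # left the screen: screen-space methods have no data there
        behind = z - depth_row[i]
        if 0.0 <= behind <= thickness:
            return i
    return None
```

A full global illumination pass would march many such rays per pixel in 2D and gather the color found at the hit pixels as bounced light; the early `None` when a ray leaves the screen is the well-known blind spot of every screen-space technique.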

Lighting without using SSRTGI // Lighting with SSRTGI turned on

We will describe how SSRTGI works in more detail in the next post, together with the release of UNIGINE 2.5. In the meantime, you can see how the effects are put together and how they behave at different settings in Superposition’s game mode by enabling debug.

Music made for repetition

The music for all our projects (the previous benchmarks and the game Oil Rush) was written by Misha Paralyzah, and he also composed the Superposition soundtrack. The difficulty is that a benchmark is usually run many times, so the music must not bore the user, yet it is an important part of the overall impression. Plus, ideally the music should match the camera fly-throughs to keep up the pace of what is happening on screen.

We could not record a live symphony orchestra, so Misha assembled the soundtrack in his home studio with just a good library of virtual instruments, a MIDI keyboard and many years of experience. You can listen to the result yourself: a stringy cello picks up the measured sounds of the piano, and electronic notes gradually weave in as the power of science defeats gravity and time on screen.

The manufacturers’ pain

We mentioned earlier that one advantage of making benchmarks is working closely with GPU developers. On the one hand, it is a great perk: direct contact with engineers and access to tomorrow’s hardware. On the other hand, it adds a large layer of work to the project.

The very first pre-pre-alpha went to vendors before anyone else, back in November. But it arrived at a bad time: their labs were busy testing pre-Christmas games. The manufacturers “came back” closer to March, all at once. Lab test results from NVIDIA, AMD and Intel hit us from three directions, sometimes in turns and sometimes simultaneously. And each of them felt that Superposition somehow underrated their graphics cards. We were interested only in optimizations that mattered to users. Because of the vendors’ long silence, a large chunk of this work landed in the final weeks.

The GPU manufacturers suffered too. “Your settings changed three times over the weekend!” some complained. “Our graphics cards are much better, your benchmark must be wrong!” others protested.

After refinement, optimization and lengthy correspondence, we reached a balance: the benchmark still did not fully satisfy any manufacturer, but all of them were dissatisfied equally, and only minimally. And the benchmark had everything the user needed: accurate performance measurement across a wide range of graphics cards.

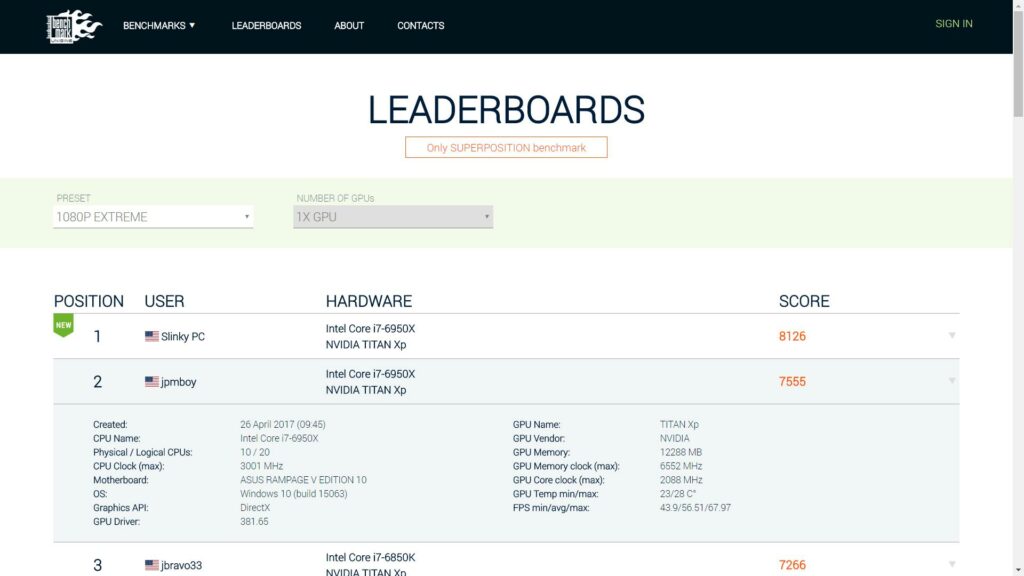

Leaderboards? Leaderboards!

Every news item about UNIGINE benchmarks is followed by screenshots of results. Every self-respecting overclocking and hardware forum grows threads full of results; Valley, for example, has an epic thread 1,300 pages long. So we decided that since we were making our fifth benchmark, it was time to stop relying on other people’s forums and launch our own leaderboards! That is where we unexpectedly stumbled. We had long known the whole process of producing and releasing a benchmark from start to finish, and it was easy to plan. But the development of the leaderboards stayed hidden in the fog of war until the last moment.

Hooray, release! Oh, wait…

We had moved the release to the end of the first quarter, and we were literally a few days short of meeting the deadline. A benchmark has to be high quality from the start: releasing patches and versions 1.1.1, 1.1.2 is not an option, because the results would drift. The product must be ready and stable from day one. To let our engineers, the vendor labs and beta testers test the benchmark as thoroughly as possible, we moved the release to April 6.

On the morning of April 6, it turned out that the whole world was waiting. “I took the day off specially because Superposition is coming out! When can I download it?” wrote James from the UK. “It’s already April 6! Where’s the benchmark?” wrote Bruce from the US East Coast. “I started this thread in advance because Superposition comes out today. Meanwhile, I’ll make the thread look nice!” someone posted on an overclocking forum. “How’s it going? Soon? Will you let me know?” James wrote again. Then Bruce. And Steve. And Marco. And a few dozen other users, through every possible channel.

A couple of weeks before the release date, we realized we had underestimated the work involved in launching the new website with leaderboards and all the back end behind it. We picked the most critical parts: launching the new benchmark website, downloads from it, user authorization, a payment system and, most importantly, collecting results into a database from which the leaderboard would later be built. Plus connecting the front end to the back end.

On the evening of the first release attempt, we shot a short but dramatic video.

By the night of April 6-7, the Superposition benchmark itself was ready and frozen. But the release-critical parts of the back end and front end still refused to work properly. By executive decision, the release was moved to April 11.

Over those 4 days, the rate of web commits tripled. Tolya, the hero of the detector and the back end, went to the hospital twice (a planned stay, not because of the release!) and never stopped committing from there; only anesthesia stopped him. For an hour. Web programmer Lenya stopped going home too. So did QA.

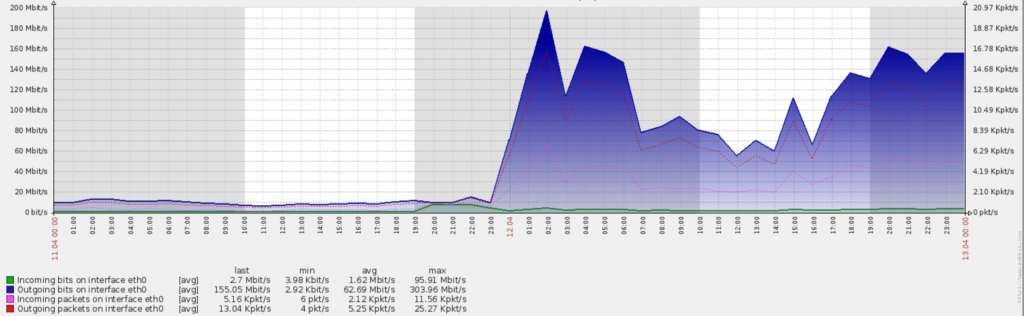

Here we need to go back to the Steam drama for a moment. The download volume for this release was much larger: the Superposition build was 3 times the size of Valley, our previous benchmark. And the number of people eager to download a new UNIGINE benchmark had also grown since then. We had counted on Greenlight and Valve’s servers to distribute the files without trouble, and we were not at all prepared to serve a couple dozen terabytes of traffic per day. Yes, we know about torrents, but a significant share of users avoid them on principle.

We needed a quick solution so that everyone could download the long-awaited benchmark, our servers would not go down, and it would not cost the company a fortune. Sergey, our IT director, went 10% grayer while his department looked for a way to do it.

In the end, we came up with a scheme: buy a bunch of small hosting plans at $5-10 each, spread our files across them, and redirect people coming for the benchmark to these hosts and mirrors in arbitrary order, offloading our own servers. In total we set up 11 mirrors on a budget of 3,000 rubles.

This is what the traffic of just one server looked like on release night.

We served part of the downloads from our own servers with rate limits so they would not go down, and part from the mirrors, which periodically crashed and went offline. The second Sergey from the IT department wrote a separate 150-line utility that checked the state of the mirrors once a minute, took the “dead” ones out of rotation and put them back when they came back to life. To make the release in time, we had to set priorities: launch the new site, prepare everything for distributing the builds, and set up the collection of user results into the database for the future leaderboards.
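The 150-line mirror monitor is not published, but the logic described above can be sketched in a few lines. `MirrorPool`, `monitor` and the `probe` callback are hypothetical names; a real probe might, for example, send an HTTP HEAD request with a short timeout:

```python
import random
import time
from typing import Callable, Optional


class MirrorPool:
    """Tracks which download mirrors are currently healthy."""

    def __init__(self, mirrors: list[str]) -> None:
        self.mirrors = list(mirrors)
        self.alive = set(self.mirrors)  # assume all healthy until the first check

    def check_all(self, probe: Callable[[str], bool]) -> None:
        """Re-probe every mirror: dead ones leave rotation, revived ones return."""
        self.alive = {m for m in self.mirrors if probe(m)}

    def pick(self, rng: random.Random) -> Optional[str]:
        """Pick a random healthy mirror to redirect the next download to."""
        healthy = [m for m in self.mirrors if m in self.alive]
        return rng.choice(healthy) if healthy else None


def monitor(pool: MirrorPool, probe: Callable[[str], bool], interval_s: float = 60.0) -> None:
    """Run the health check forever, once per interval (once a minute by default)."""
    while True:
        pool.check_all(probe)
        time.sleep(interval_s)
```

Re-probing every mirror on each pass, rather than only the live ones, is what lets a crashed host rejoin the rotation automatically once it is back.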

Then the web developers suddenly asked for a server with 32 GB of RAM. None of UNIGINE’s other web projects had been built for high load: each site needed only a gig or two of RAM. So a request for 32 GB of RAM for a single site came as a complete surprise. The IT director went another 10% grayer, but still managed to buy and configure the server in just half a day.

By April 11, the critical back-end and front-end parts were ready for launch. Before the sun had risen over California, we had finished the whole invisible part “under the hood”. And after final tests and three “final” versions of the final build, at 23:00 on April 11 we published Superposition for download. And finally exhaled: the release had happened.

Postponing the release turned out to be easier than canceling the cake ordered to celebrate it, so the cake arrived on April 7. The artists were tired but happy: their part of the project was definitely over.

What we did not finish

Ever since the very first post about the benchmark, most questions have been about Vulkan and DX12 support. Vulkan has a promising future, but the API is not yet stable on all platforms. DX12 works only on Windows 10, and not all users of our benchmarks have moved to Windows 10 (we know this for sure thanks to the detector). So we postponed Vulkan and DX12 integration to future versions.

Overclockers from around the world stockpiled liquid nitrogen in anticipation of Superposition.

By release time we had not mastered SLI and CrossFire support. Technically, both work. But CrossFire produces graphical artifacts, and SLI does not improve performance and, depending on the configuration, sometimes produces artifacts as well. This is due to the specifics of the TAA (Temporal Anti-Aliasing) and SSRTGI effects and to the design of multi-GPU interfaces. In SLI and CrossFire, the textures used to render each frame must be synchronized between two or more graphics cards, and the transfer speed is severely limited by PCI Express bandwidth. When rendering at extreme settings in 4K and 8K, the textures get even larger, and so does the amount of data sent over PCI Express. That is why real-time graphics with temporal effects often scale poorly in SLI and CrossFire.
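A back-of-the-envelope calculation shows why resolution matters so much here. The inputs are assumptions for illustration only: an RGBA16F render target (8 bytes per pixel), two full-screen textures synchronized per frame (for example, a TAA history buffer and one GI buffer) and 60 fps. The 15.75 GB/s figure is the theoretical one-way bandwidth of a PCIe 3.0 x16 link:

```python
# Theoretical one-way bandwidth of a PCIe 3.0 x16 link, in GB/s.
PCIE3_X16_GB_S = 15.75


def texture_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one full-screen render target."""
    return width * height * bytes_per_pixel


def sync_traffic_gb_s(width: int, height: int, bytes_per_pixel: int,
                      textures_per_frame: int, fps: int) -> float:
    """Data that would cross the bus per second if every frame's textures were copied."""
    return texture_bytes(width, height, bytes_per_pixel) * textures_per_frame * fps / 1e9


for name, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
    need = sync_traffic_gb_s(w, h, bytes_per_pixel=8, textures_per_frame=2, fps=60)
    print(f"{name}: {need:.1f} GB/s needed vs {PCIE3_X16_GB_S} GB/s available")
```

This prints 8.0 GB/s for 4K and 31.9 GB/s for 8K. Under these assumptions, 4K synchronization alone eats about half of the bus, and 8K needs roughly twice what the bus can carry at all.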

Some configurations do get a performance gain, if SLI and CrossFire run in AFR-friendly mode. Then textures are not synchronized, each card renders its own frame, the bus is not loaded with transfers of unnecessary data, and performance goes up (although in SLI, again, not on all configurations). But the lack of synchronization produces graphical artifacts.
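Alternate frame rendering (AFR) simply assigns whole frames to GPUs in turn. The sketch below, with hypothetical function names, shows why that clashes with temporal effects: the previous frame, which TAA-style effects reuse, always lives on a different GPU once there is more than one card:

```python
def afr_gpu_for_frame(frame_index: int, gpu_count: int) -> int:
    """Alternate frame rendering: GPUs take turns rendering whole frames."""
    if gpu_count < 1:
        raise ValueError("need at least one GPU")
    return frame_index % gpu_count


def history_is_local(frame_index: int, gpu_count: int) -> bool:
    """True if the previous frame (reused by temporal effects) ran on the same GPU."""
    if frame_index < 1:
        raise ValueError("frame 0 has no previous frame")
    prev_gpu = afr_gpu_for_frame(frame_index - 1, gpu_count)
    return prev_gpu == afr_gpu_for_frame(frame_index, gpu_count)
```

With two or more GPUs, `history_is_local` is always false, so a temporal effect must either copy the previous frame across the bus or do without it; skipping the copy is what produces the artifacts described above.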

We did not figure out how to stabilize this for every configuration in time for the release. There are ways to tackle it: reduce the number of synchronized textures, compress the data, try a different synchronization method, or abandon temporal effects altogether. But none of this is quick, so multi-GPU optimization has moved to future versions of Superposition.

Superposition conquers the world: a photo from Gamers Assembly in France, the largest European gaming festival

The leaderboards, with all their front end and back end, were fully finished and launched 3 weeks after the release. Overclockers with liquid nitrogen have already claimed the top lines. It is hard for an ordinary user to catch them, but you can try!

The bottom line

In fact, this post does not tell every drama or mention every hero; the whole saga would take a couple more posts. And although there were some fails along the way, we cannot help being proud of the results of the year of work that took us from the start of demo production to the release of the Superposition benchmark.

- We made the most beautiful benchmark in the world.

- Our artists leveled up so much that they surprised themselves.

- We refined the SSRTGI technology in the engine.

- We finally launched the online service.

- We learned to identify all kinds of hardware much more accurately.

On the first day, we collected 13,000 results in the database. At the time of publication, Superposition had been installed more than 100,000 times, and installs continue at a steady pace. In total, the work took 6,100 commits, 1,437 of them in the last 90 days before the release.

Lines of code written (not counting engine modifications):

- C++: 41,952

- UnigineScript: 10,000

- PHP: 69,000

- JavaScript: 4,000

The final build contains 900+ objects and 400+ textures.

Release candidates built: 7.

Graphics cards burned in the name of the release: 1.

And finally, release day brought many reviews and articles about Superposition. All the top hardware portals worldwide covered the release, for example Tom’s Hardware, TechPowerUp, VideoCardz.com and our own native iXBT. Video reviews from users and video bloggers started coming out too.

You can judge the result yourself by downloading Superposition. Although at maximum settings the benchmark can bring any modern system to its knees, its various quality presets let you run it on a computer that is 4-5 years old.

Or look into the future of real-time 3D graphics by watching the video at maximum quality in 8K resolution. This mode is also available in the benchmark, but not every top-end graphics card can handle it.

Beautiful graphics and powerful hardware to all of you! See you soon on our blog!